From Data to Knowledge: Building a Single Source of Truth


“Without being able to analyse data comprehensively and systematically, policing won’t be as efficient as it can be.” (State of Policing - The Annual Assessment of Policing in England and Wales 2019)

This is the first instalment in a series on how advanced data analysis can improve law enforcement. This opening post sets the stage by discussing how unstructured data can be converted into a coherent, insightful and powerful resource. Later posts in the series build on this foundation to show how that resource underpins better policing methods. Throughout, the focus is on the transformative power of Knowledge Graphs.

Data analysis and management is a fundamental challenge for law enforcement and policing. The “State of Policing - The Annual Assessment of Policing in England and Wales 2019” underscores the inefficiency that arises in policing without systematic data analysis. The first step in tackling this issue is the transition from isolated data silos to a holistic intelligence data system. This involves the arduous task of integrating multiple heterogeneous data sources, each with its own data model and access mechanism, into a cohesive and searchable whole. The complexity of this process is compounded by the unstructured nature of some data types, such as textual reports, which can make investigations both time-consuming and susceptible to errors.

At the same time, police forces have had real success deploying an impressive array of state-of-the-art data collection and analysis tools. Digital forensics, powered by advanced software and platforms capable of extracting vast amounts of data from devices such as phones, tablets, and PCs, exemplifies this technological progress. These tools have accumulated a significant repository of valuable data and information, housed in specialised systems and databases. Nevertheless, a pressing challenge remains: how to enable analysts to efficiently search and analyse these disparate data repositories.

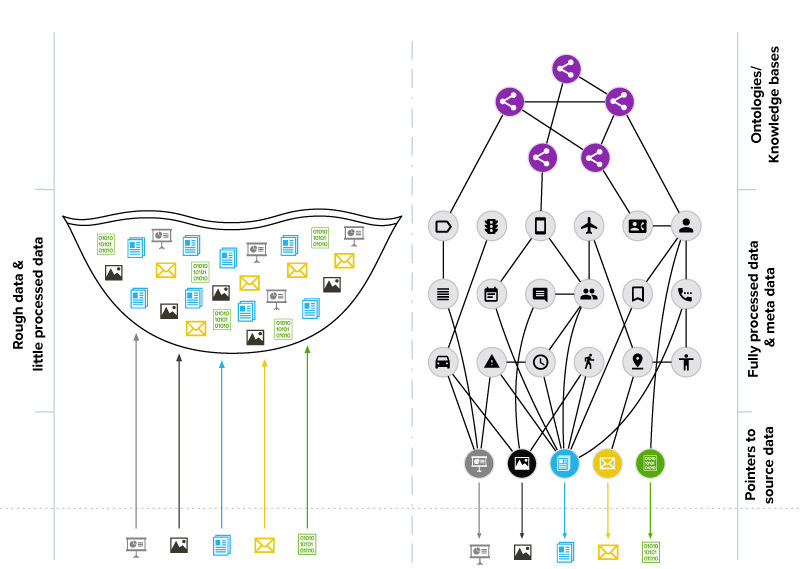

The typical approach of aggregating data into a central “Data Lake” in raw format does not sufficiently address the problem. It fails to adhere to the FAIR principles, which emphasise findability, accessibility, interoperability, and reusability of data. Instead, the solution lies in the implementation of knowledge graphs. These graphs create a semantic layer over the data collection, ensuring the meaningful connection and machine-processability of the data.

Building the Foundation: From Raw Data to Knowledge Graphs

The journey in our proven process begins with ingesting data into knowledge graphs, which may have different schemas based on the requirements. This process involves key subtasks such as extraction, normalisation, matchmaking and connection, and entity resolution.

Ingestion starts with extraction: retrieving data from diverse sources, which means dealing with varied data formats. The subsequent normalisation phase standardises the data, aiming for consistency and comparability across the different data types.

Then, the matchmaking and connection phase identifies and links relationships between data points, striving to create an interconnected dataset. The main challenge is accurately linking data from disparate sources. Lastly, entity resolution is crucial for discerning when different entries pertain to the same entity, key for removing duplicates and consolidating information.
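As a rough illustration of two of these subtasks, the sketch below normalises a few toy records and then groups them by a shared identifier, which is one very simple form of entity resolution. The `Record` type, the source names, the sample data and the choice of a phone number as the matching key are all assumptions made for this example; real entity resolution typically relies on fuzzier, multi-attribute matching.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    """Hypothetical record shape; real sources will each differ."""
    source: str
    name: str
    phone: str


def normalise(record: Record) -> Record:
    """Standardise the name (lower case, single spaces) and keep only phone digits."""
    name = " ".join(record.name.lower().split())
    phone = re.sub(r"\D", "", record.phone)
    return Record(record.source, name, phone)


def resolve_entities(records: list[Record]) -> dict[str, list[Record]]:
    """Group records that share a normalised phone number.

    Assumption: an exact match on the phone number identifies the same
    entity. This is deliberately naive and only for illustration.
    """
    entities: dict[str, list[Record]] = {}
    for record in map(normalise, records):
        entities.setdefault(record.phone, []).append(record)
    return entities


# Toy data standing in for extracts from three separate systems.
raw_records = [
    Record("custody_system", "John  SMITH", "+44 7700 900123"),
    Record("phone_extract", "john smith", "447700900123"),
    Record("incident_report", "Jane Doe", "07700 900456"),
]

entities = resolve_entities(raw_records)
for key, grouped in entities.items():
    print(key, [r.source for r in grouped])
```

Here the first two records collapse into one entity because their phone numbers agree once formatting differences are removed, while the third remains separate.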

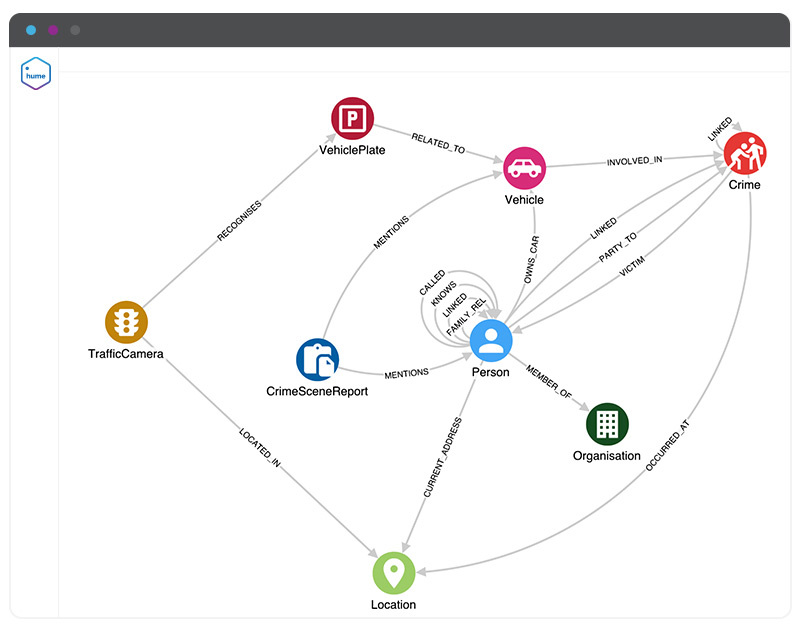

Each phase is pivotal in converting the chaos of unstructured or semi-structured data into a structured format for knowledge graphs, thereby enhancing the precision and efficiency of data analysis. The following image illustrates an example of our knowledge graph with explorable interconnected nodes and relationships.

The knowledge graph thus becomes a foundation for a single source of truth, respecting FAIR principles and providing a single, cohesive structure for data analysis. It is noteworthy that the transformation from raw data to a knowledge graph is not merely a transfer; it involves significant effort to enhance the quality and comprehensibility of the data. This foundational work supports the second step in our process: the transition from simple search to complex exploration.

Enhancing Criminal Intelligence: Knowledge Graphs for Advanced Data Exploration and Insightful Investigations

Criminal Network Analysis requires the assimilation and contextual analysis of various data types. Knowledge graphs enable this by facilitating advanced queries that interlink disparate data points, such as connecting a crime victim to associated individuals, their vehicles, and subsequent sightings by traffic cameras. The graph exploration approach allows analysts to navigate through a web of data, following their investigative intuition and hypotheses, supported by visual aids that map the paths of their inquiry.

This method of exploration streamlines the analytical process, providing quicker, more accurate results than conventional search methods. It’s a shift from the manual, error-prone efforts of piecing together evidence to a more agile, efficient, and insightful investigation process.
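To make the idea of following a chain of relationships concrete, here is a minimal in-memory sketch of the victim, associate, vehicle, camera-sighting example. The node identifiers, the relationship names (`ASSOCIATED_WITH`, `OWNS`, `SIGHTED_AT`) and the `follow` helper are hypothetical and chosen only for illustration; a production system would normally express this as a query against a graph database rather than in plain Python.

```python
from collections import defaultdict

# Toy edges as (source, relationship, target); all values are invented.
edges = [
    ("victim:V1", "ASSOCIATED_WITH", "person:P1"),
    ("victim:V1", "ASSOCIATED_WITH", "person:P2"),
    ("person:P1", "OWNS", "vehicle:AB12CDE"),
    ("person:P2", "OWNS", "vehicle:XY34ZZZ"),
    ("vehicle:AB12CDE", "SIGHTED_AT", "camera:C7"),
]

# Index outgoing edges by (node, relationship) for quick lookup.
graph = defaultdict(list)
for source, relationship, target in edges:
    graph[(source, relationship)].append(target)


def follow(start: str, relationships: list[str]) -> list[list[str]]:
    """Return every path from `start` that follows the relationships in order."""
    paths = [[start]]
    for relationship in relationships:
        paths = [
            path + [target]
            for path in paths
            for target in graph.get((path[-1], relationship), [])
        ]
    return paths


# Which cameras sighted vehicles owned by people associated with the victim?
paths = follow("victim:V1", ["ASSOCIATED_WITH", "OWNS", "SIGHTED_AT"])
for path in paths:
    print(" -> ".join(path))
```

Only paths that complete every hop are returned, so the associate whose vehicle has no recorded sighting drops out of the result.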

Optimising the time analysts devote to data processing directly influences their effectiveness and their impact on the community. Consider a hypothetical scenario: a police force has 50 analysts, each working 200 days annually and running an average of 20 queries a day to resolve cases. While the actual figures may be higher, for illustrative purposes assume that an efficient access mechanism reduces the time for each query by just 1 minute. This reduction, although modest, adds up quickly, especially considering the usual need to search and combine data manually.

50 analysts x 200 days/year x 20 queries/day x 1 min/query = 200,000 minutes ≈ 3,333 hours ≈ 416 working days (assuming an 8-hour working day)

That is roughly the work delivered by two people in one year, so it is like having two more analysts on the team at no extra cost. The adoption of knowledge graphs not only improves the speed and quality of criminal intelligence but also acts as a force multiplier, effectively adding more analytical capacity to the team.
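The back-of-the-envelope estimate can be reproduced in a few lines, which also makes it easy to plug in a force's own figures. The 8-hour working day is an assumption needed to convert saved hours into working days; the other inputs come from the hypothetical scenario above.

```python
# Inputs from the hypothetical scenario.
analysts = 50
working_days_per_year = 200
queries_per_day = 20
minutes_saved_per_query = 1

# Assumption: one working day is 8 hours.
hours_per_working_day = 8

minutes_saved = (
    analysts * working_days_per_year * queries_per_day * minutes_saved_per_query
)
hours_saved = minutes_saved / 60
working_days_saved = hours_saved / hours_per_working_day
analyst_years_saved = working_days_saved / working_days_per_year

print(f"Minutes saved per year: {minutes_saved}")
print(f"Working days saved per year: {working_days_saved:.1f}")
print(f"Equivalent analysts: {analyst_years_saved:.2f}")
```

With these inputs the saving is a little over 416 working days a year, or just over two analyst-years.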

In conclusion, the knowledge graph approach offers a paradigm shift in data management and exploration for law enforcement agencies. By adhering to FAIR principles and enabling a more efficient investigative process, knowledge graphs represent a significant advancement over traditional data aggregation methods, such as Data Lakes. As we progress, the subsequent steps will delve into leveraging knowledge graphs for even more advanced objectives, further empowering law enforcement with robust, actionable intelligence.

Stay at the forefront of law enforcement technology with GraphAware. Watch for our upcoming blog series on predictive policing, where we delve deeper into how knowledge graphs are shaping the future of crime prevention. Stay tuned, learn with us, and transform your data into foresight. Contact GraphAware to be part of this cutting-edge journey.